Diffusion model personalization has made significant progress. Conventional tuning-free methods typically encode multiple reference images by averaging their image embeddings into a single injection condition. Because this operation treats each image independently, it allows no interaction among the images and therefore cannot capture the visual elements that are consistent across the references. Tuning-based Low-Rank Adaptation (LoRA) can effectively extract consistent elements from multiple images during training, but it requires separate finetuning for each distinct image group.
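The contrast between independent averaging and cross-image interaction can be made concrete with a small NumPy sketch. `average_condition` mirrors the mean pooling described above. `consistency_weights` is a hypothetical toy that scores each image by its mean cosine similarity to the other references and applies a softmax with a `temperature` parameter. It only illustrates what interaction among images can provide and is not EasyRef's mechanism, which relies on an MLLM instead.

```python
import numpy as np

def average_condition(embeddings: np.ndarray) -> np.ndarray:
    # Conventional tuning-free aggregation: each row of `embeddings` (n_images, dim)
    # is computed from one image alone, and the rows are simply averaged.
    return embeddings.mean(axis=0)

def consistency_weights(embeddings: np.ndarray, temperature: float = 0.1) -> np.ndarray:
    # Hypothetical toy interaction: score each image by its mean cosine similarity
    # to the OTHER references, then turn the scores into softmax weights.
    unit = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    sim = unit @ unit.T                                   # (n, n) pairwise cosine similarity
    n = sim.shape[0]
    scores = (sim.sum(axis=1) - np.diag(sim)) / (n - 1)   # exclude self-similarity
    logits = scores / temperature
    logits -= logits.max()                                # numerical stability
    w = np.exp(logits)
    return w / w.sum()

def interactive_condition(embeddings: np.ndarray, temperature: float = 0.1) -> np.ndarray:
    # Weighted mean that favors content shared across the references.
    return consistency_weights(embeddings, temperature) @ embeddings

if __name__ == "__main__":
    common = np.array([3.0, 0.0, 0.0])    # direction shared by two references
    outlier = np.array([0.0, 0.0, 3.0])   # one unrelated reference
    embs = np.stack([common, common, outlier])
    print(average_condition(embs))        # [2. 0. 1.]
    print(interactive_condition(embs))
```

With two agreeing references and one outlier, the plain mean moves a third of the way toward the outlier. The consistency-weighted mean stays close to the shared direction because the images are compared with each other before aggregation.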

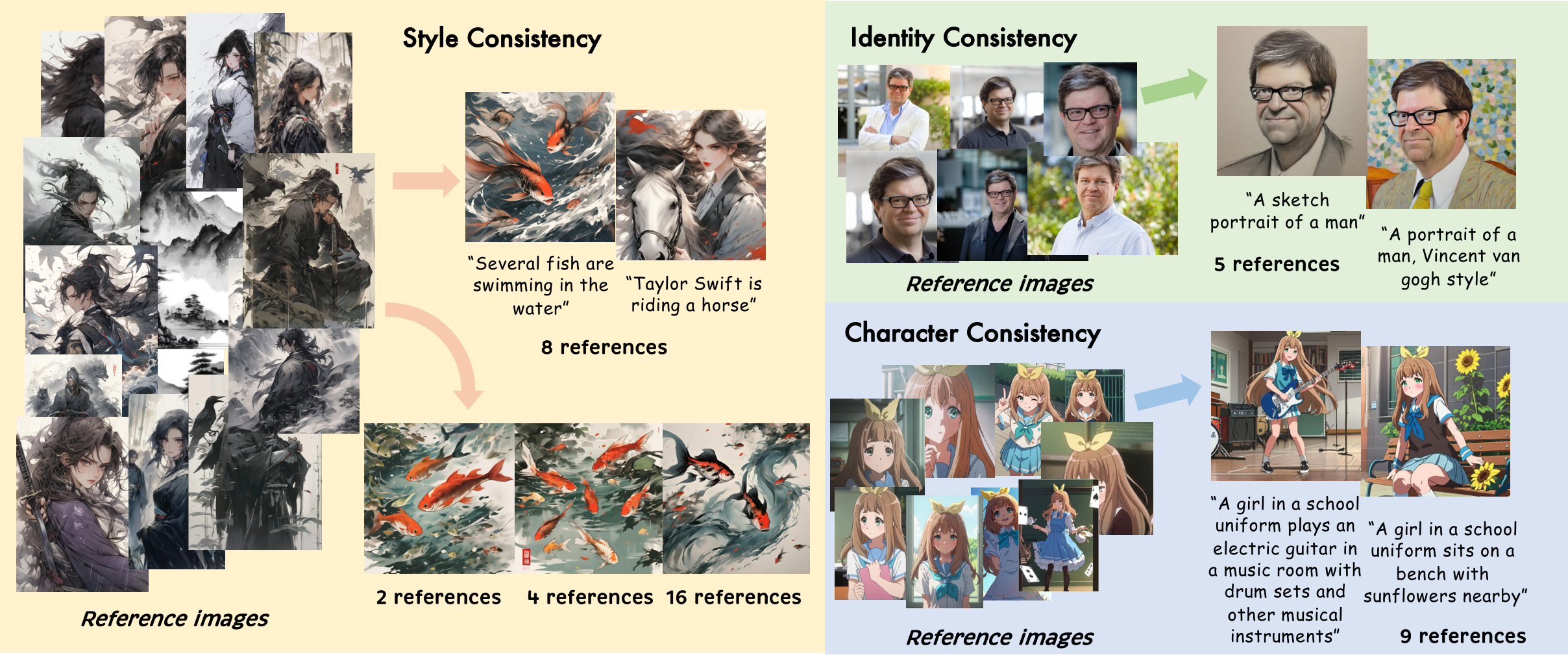

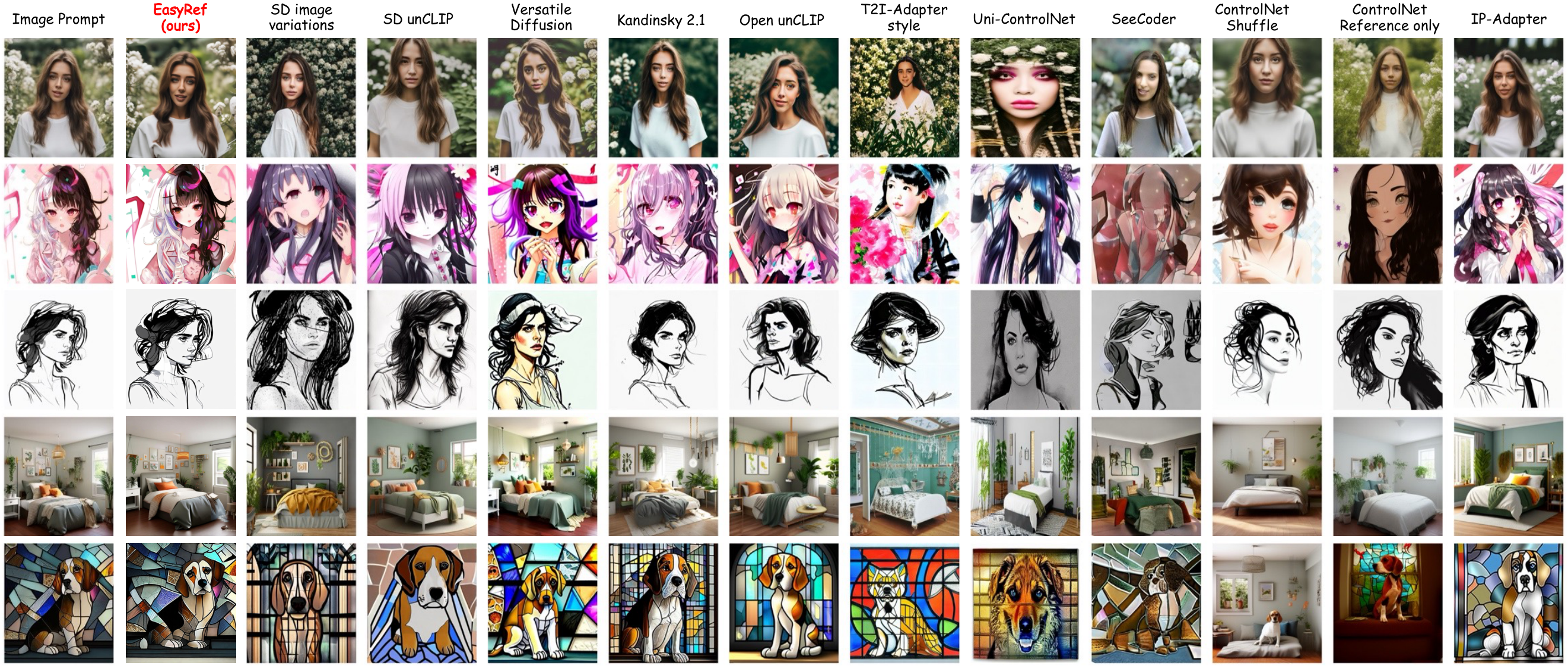

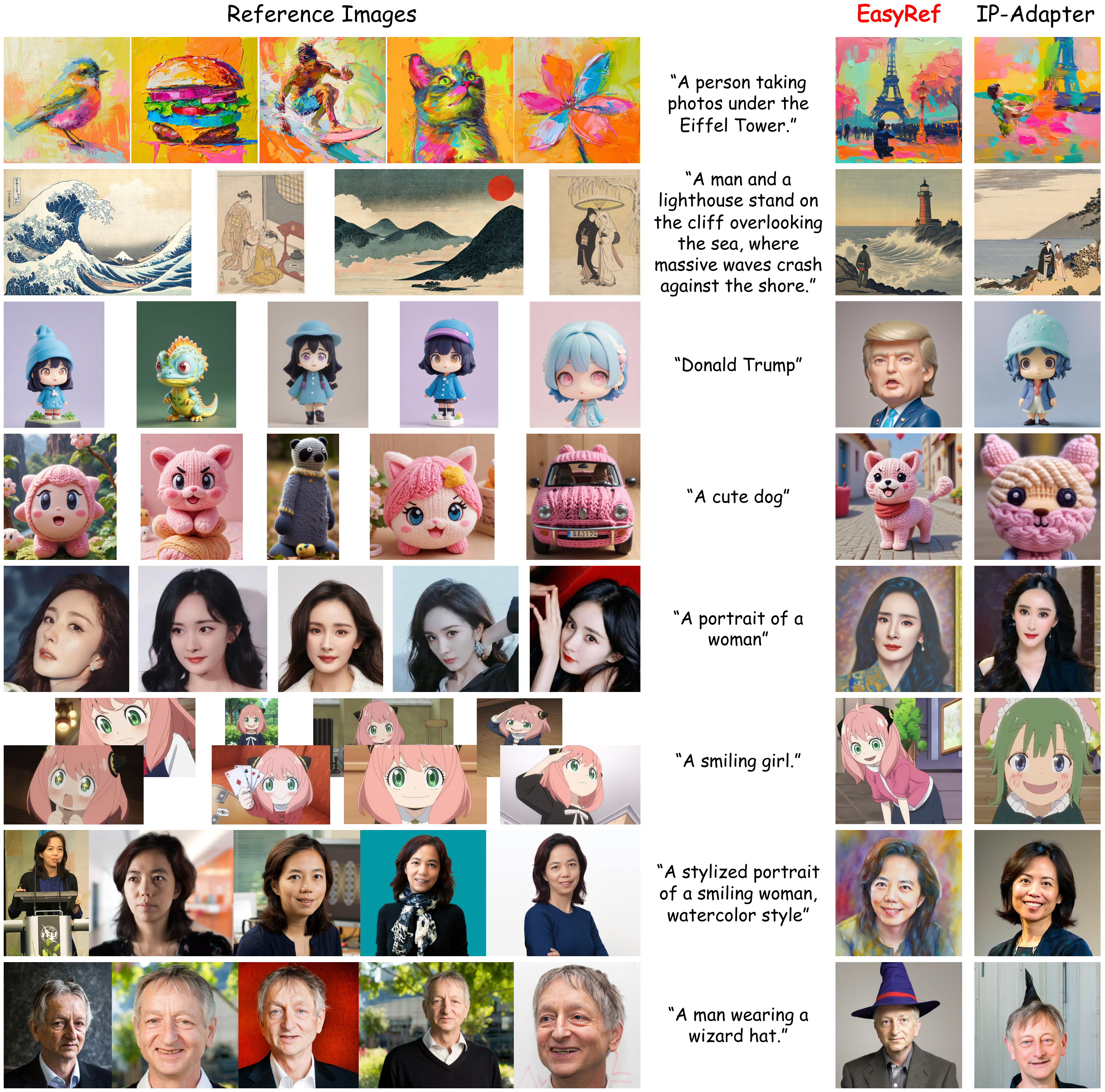

This paper introduces EasyRef, a novel plug-and-play adaptation method that enables diffusion models to be conditioned on multiple reference images and a text prompt. To effectively exploit the consistent visual elements within multiple images, we leverage the multi-image comprehension and instruction-following capabilities of a multimodal large language model (MLLM), prompting it to capture consistent visual elements according to an instruction. Moreover, injecting the MLLM's representations into the diffusion process through adapters generalizes easily to unseen domains, allowing the model to mine consistent visual elements in unseen data. To reduce computational cost and improve fine-grained detail preservation, we introduce an efficient reference aggregation strategy and a progressive training scheme. Finally, we introduce MRBench, a new benchmark for multi-reference image generation.

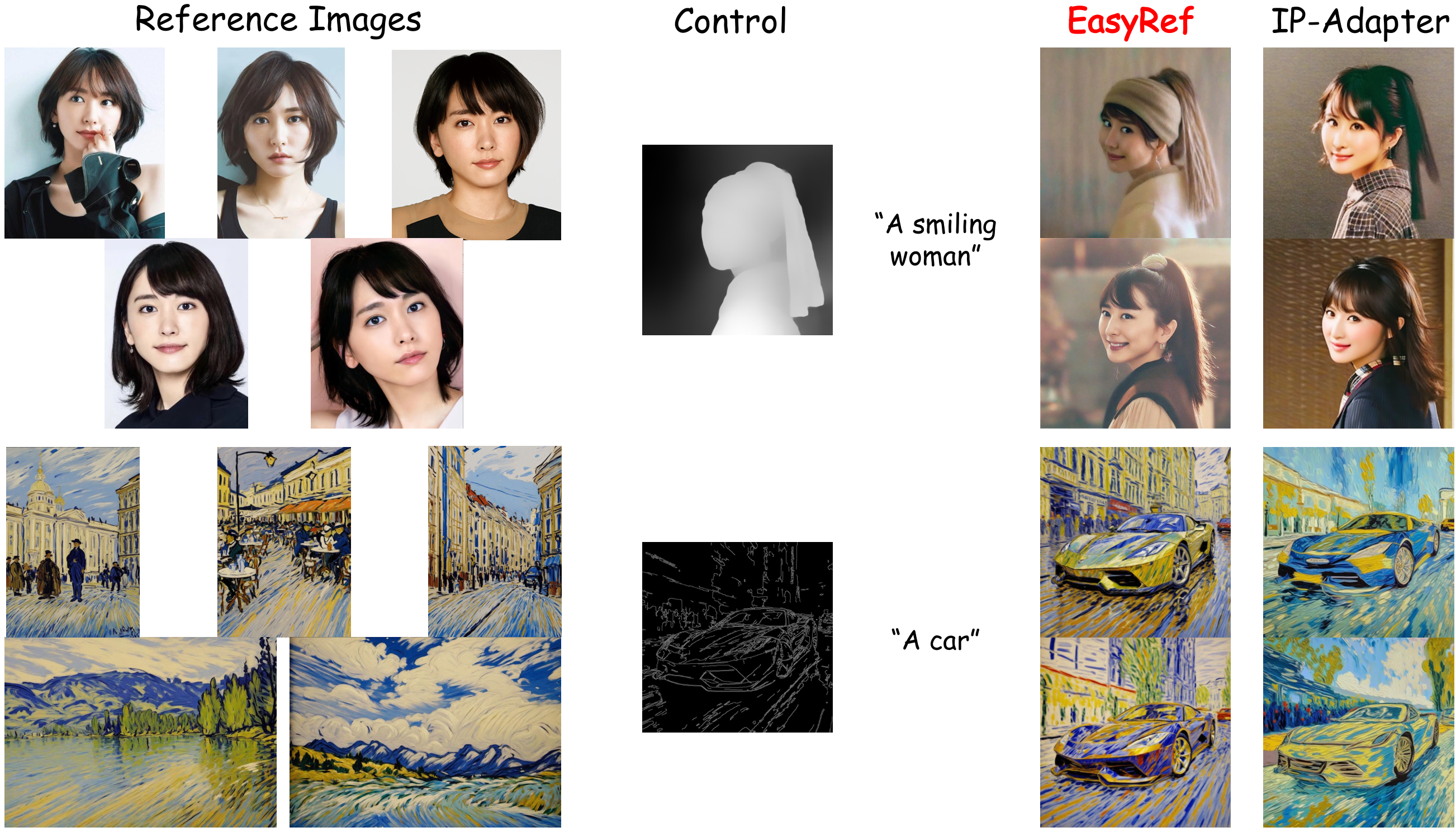

Experimental results demonstrate that EasyRef surpasses both tuning-free methods such as IP-Adapter and tuning-based methods such as LoRA, achieving superior aesthetic quality and robust zero-shot generalization across diverse domains.

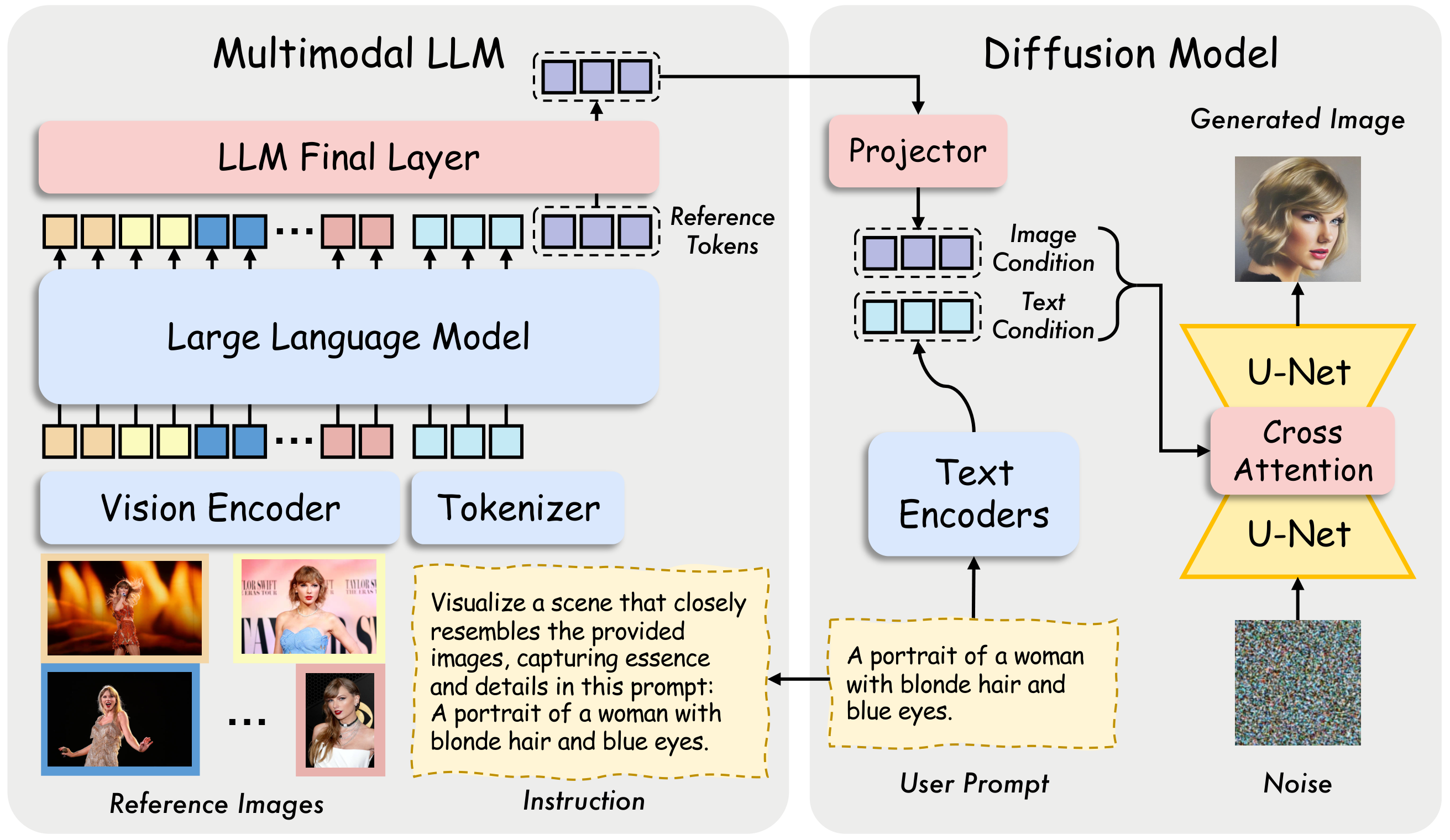

Overview of EasyRef with SDXL.

EasyRef comprises four key components: (1) a pretrained diffusion model for conditional image generation, (2) a pretrained multimodal large language model (MLLM) that encodes a set of reference images together with the text prompt, (3) a condition projector that maps the MLLM's representations into the latent space of the diffusion model, and (4) trainable adapters that integrate the image conditioning embeddings into the diffusion process.
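Components (3) and (4) can be sketched in NumPy under two loudly stated assumptions. First, the projector is modeled as a single linear layer followed by LayerNorm. Second, the adapter follows IP-Adapter's decoupled cross-attention, in which a new trainable key/value branch for the image condition is added to the frozen text branch and both share the same query. The class names, toy dimensions, and single-head simplification are hypothetical. For reference, SDXL's actual cross-attention context width is 2048, but small sizes are used here.

```python
import numpy as np

rng = np.random.default_rng(0)

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)   # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(q, k, v):
    # Single-head scaled dot-product attention: (n_q, d), (n_kv, d), (n_kv, d_v) -> (n_q, d_v)
    return softmax(q @ k.T / np.sqrt(q.shape[-1])) @ v

class ConditionProjector:
    """Hypothetical projector: MLLM hidden size -> diffusion context size, then LayerNorm."""
    def __init__(self, d_mllm: int, d_ctx: int):
        self.w = rng.normal(0.0, d_mllm ** -0.5, (d_mllm, d_ctx))  # trainable

    def __call__(self, h: np.ndarray) -> np.ndarray:
        x = h @ self.w
        mu = x.mean(axis=-1, keepdims=True)
        var = x.var(axis=-1, keepdims=True)
        return (x - mu) / np.sqrt(var + 1e-5)   # LayerNorm without affine parameters

class DecoupledCrossAttention:
    """IP-Adapter-style adapter (assumption): frozen text branch + trainable image branch."""
    def __init__(self, d_model: int, d_ctx: int, scale: float = 1.0):
        def init(rows, cols):
            return rng.normal(0.0, rows ** -0.5, (rows, cols))
        self.wq = init(d_model, d_model)     # frozen, shared by both branches
        self.wk_txt = init(d_ctx, d_model)   # frozen text key projection
        self.wv_txt = init(d_ctx, d_model)   # frozen text value projection
        self.wk_img = init(d_ctx, d_model)   # trainable image key projection
        self.wv_img = init(d_ctx, d_model)   # trainable image value projection
        self.scale = scale

    def __call__(self, x, text_ctx, image_ctx):
        q = x @ self.wq
        out_txt = attention(q, text_ctx @ self.wk_txt, text_ctx @ self.wv_txt)
        out_img = attention(q, image_ctx @ self.wk_img, image_ctx @ self.wv_img)
        return out_txt + self.scale * out_img

if __name__ == "__main__":
    proj = ConditionProjector(d_mllm=32, d_ctx=16)
    image_ctx = proj(rng.normal(size=(64, 32)))   # 64 reference-token states from the MLLM
    adapter = DecoupledCrossAttention(d_model=24, d_ctx=16, scale=1.0)
    latents = rng.normal(size=(10, 24))           # 10 latent query tokens
    text_ctx = rng.normal(size=(7, 16))           # 7 text-encoder tokens
    print(adapter(latents, text_ctx, image_ctx).shape)  # (10, 24)
```

Setting `scale=0.0` recovers the frozen text-only output exactly, which is one reason this adapter design is convenient: the base model's behavior is preserved when the image branch is switched off.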

We propose leveraging the multi-image comprehension and instruction-following capabilities of the MLLM to encode the multiple reference images and the text prompt according to an instruction. The MLLM consists of a large language model (LLM) and a vision encoder that can handle images of arbitrary resolution. Each input image is first converted into visual tokens by the vision encoder. We then embed all images into an instruction that explicitly encourages the MLLM to focus on the crucial, common content within the reference images.
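The instruction step can be illustrated with a prompt builder that interleaves one image slot per reference with a shared-content instruction and the text prompt. The placeholder string `<image>`, the function name, and the instruction wording are all hypothetical; real MLLMs define their own image tokens and chat templates, and EasyRef's exact instruction is not reproduced here.

```python
IMAGE_PLACEHOLDER = "<image>"  # hypothetical image slot token

def build_reference_instruction(num_images: int, prompt: str) -> str:
    """Interleave one image slot per reference image into a shared-content instruction."""
    if num_images < 1:
        raise ValueError("at least one reference image is required")
    slots = "".join(f"Image {i + 1}: {IMAGE_PLACEHOLDER}\n" for i in range(num_images))
    return (
        "Describe the visual elements shared by all of the following reference images.\n"
        + slots
        + f"Text prompt: {prompt}"
    )

if __name__ == "__main__":
    print(build_reference_instruction(3, "a cat wearing a red scarf"))
```

An MLLM processor typically replaces each slot with that image's visual tokens, so the sequence seen by the LLM grows with both the number and the resolution of the reference images.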

Increasing the number of reference images inevitably increases the number of visual tokens processed by the LLM. To keep inference efficient, we propose encapsulating the reference representations into 64 learnable reference tokens in the LLM. However, interpreting these newly added tokens would require training all parameters of the LLM. To improve training efficiency, we instead append the reference tokens to the context sequence only at the final layer of the LLM, keeping all preceding LLM layers frozen during pretraining. The resulting image conditions are injected into the pretrained diffusion model through cross-attention adapters.
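The final-layer aggregation can be sketched as one causal self-attention step over the frozen context states plus the 64 appended reference tokens, keeping only the reference-token outputs as the condition. This is a simplified single-head layer without the MLP, normalization, or residual paths of a real LLM block. The function name, weight matrices, and the 50-tokens-per-image figure in the demo are hypothetical.

```python
import numpy as np

NUM_REF_TOKENS = 64  # number of learnable reference tokens stated in the text

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)   # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def final_layer_with_ref_tokens(context, ref_tokens, wq, wk, wv):
    """Hypothetical final LLM layer: causal single-head self-attention over
    [frozen context states; learnable reference tokens].

    context:    (n_ctx, d) hidden states from the frozen lower LLM layers
    ref_tokens: (NUM_REF_TOKENS, d) learnable embeddings, appended only here
    returns:    (NUM_REF_TOKENS, d) reference-token outputs used as the condition
    """
    x = np.concatenate([context, ref_tokens], axis=0)
    n = x.shape[0]
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])
    future = np.triu(np.ones((n, n), dtype=bool), k=1)  # True above the diagonal
    scores = np.where(future, -np.inf, scores)          # causal mask
    out = softmax(scores) @ v
    return out[-ref_tokens.shape[0]:]                   # keep only reference-token outputs

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d = 16
    wq, wk, wv = (rng.normal(0.0, d ** -0.5, (d, d)) for _ in range(3))
    ref_tokens = rng.normal(size=(NUM_REF_TOKENS, d))
    for n_images in (1, 4, 8):
        context = rng.normal(size=(n_images * 50, d))   # hypothetical 50 tokens per image
        cond = final_layer_with_ref_tokens(context, ref_tokens, wq, wk, wv)
        print(n_images, context.shape[0], cond.shape)
```

Because the reference tokens sit at the end of a causal sequence, they can attend to every visual and text token, while the context states are unaffected by them and can come straight from the frozen layers. The condition passed to the diffusion model stays at 64 tokens regardless of how many images are supplied.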

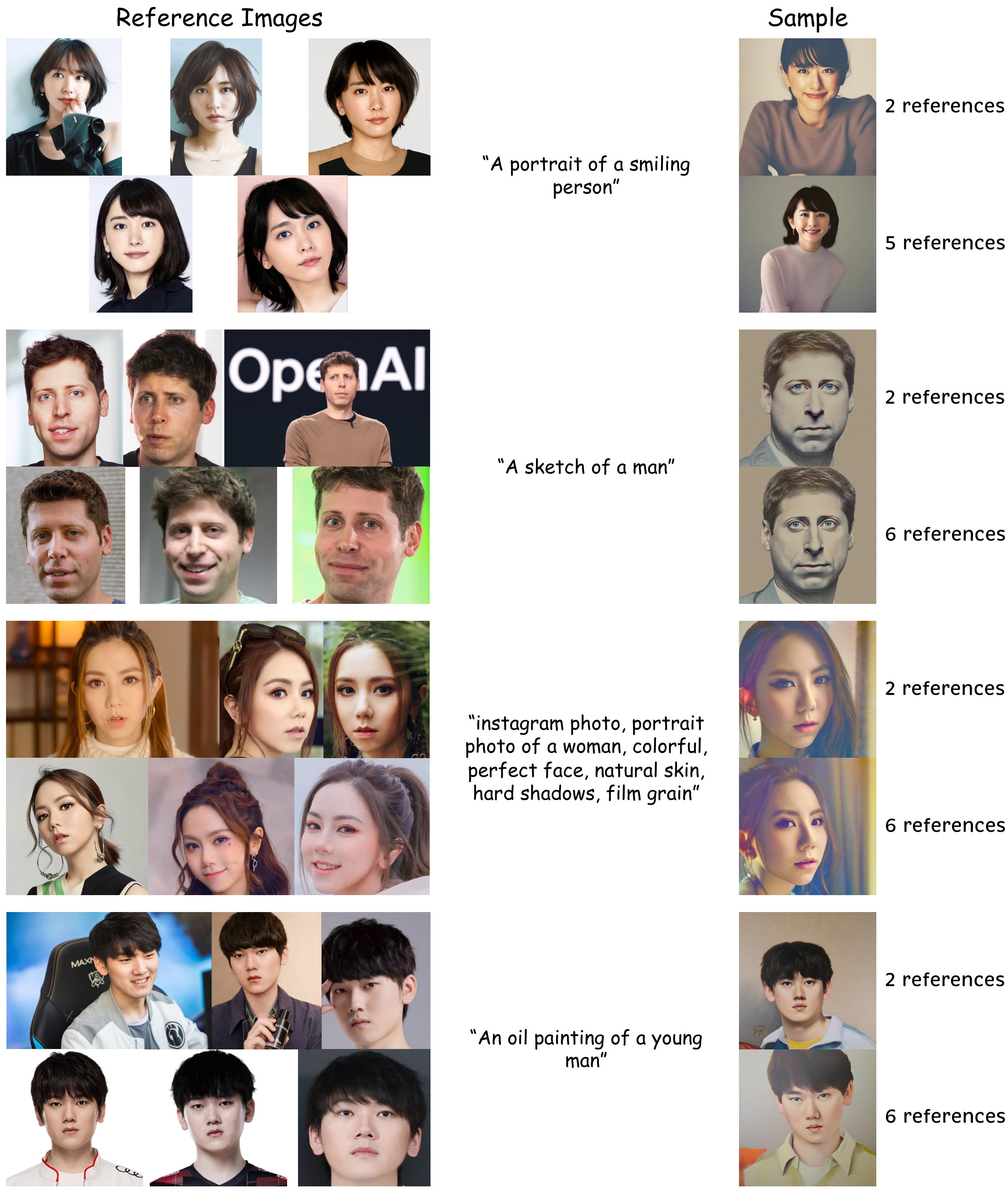

More generated samples of identity preservation with EasyRef in a zero-shot setting. This experiment uses face images of celebrities.

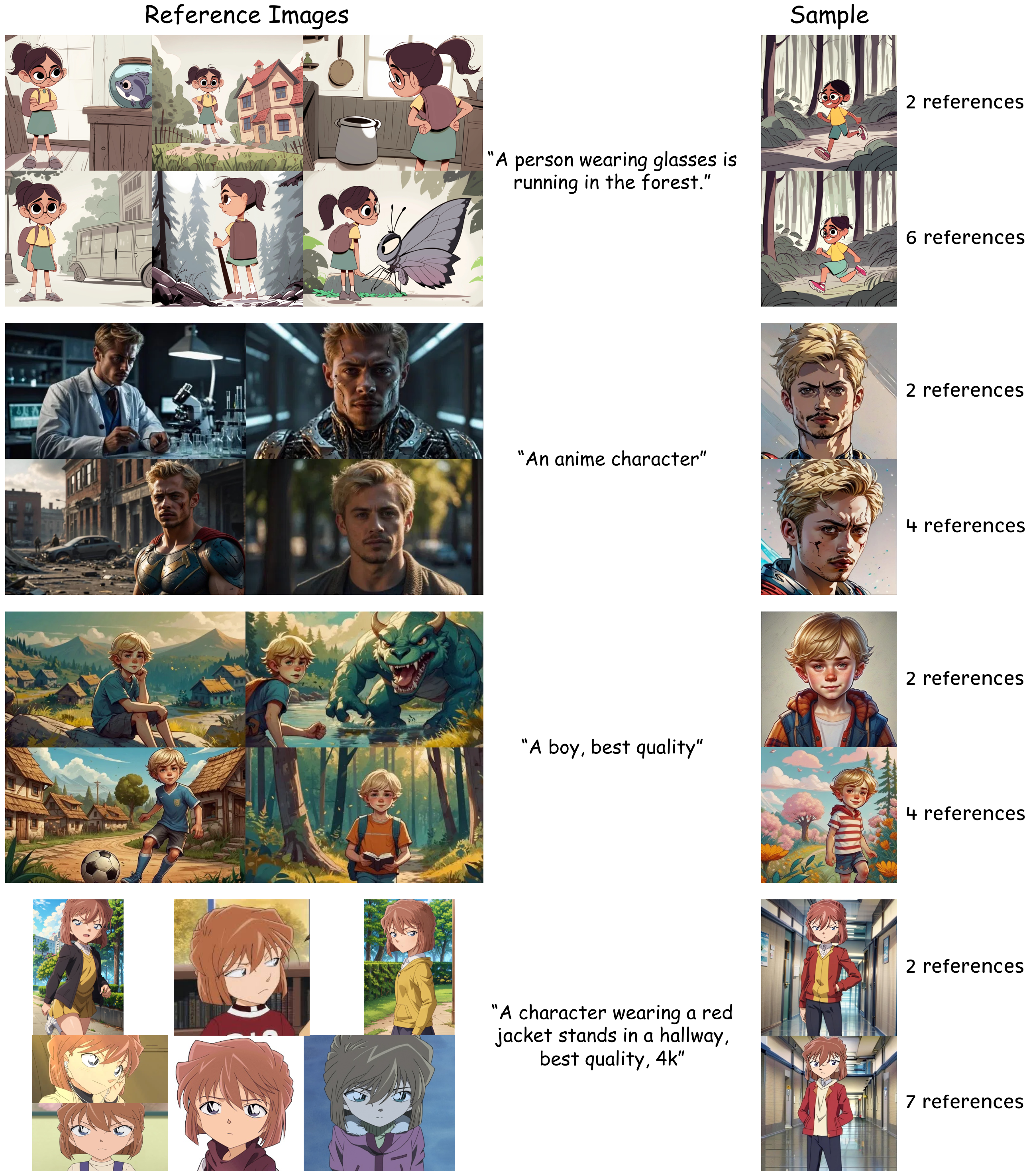

More generated samples of character consistency with EasyRef in a zero-shot setting.

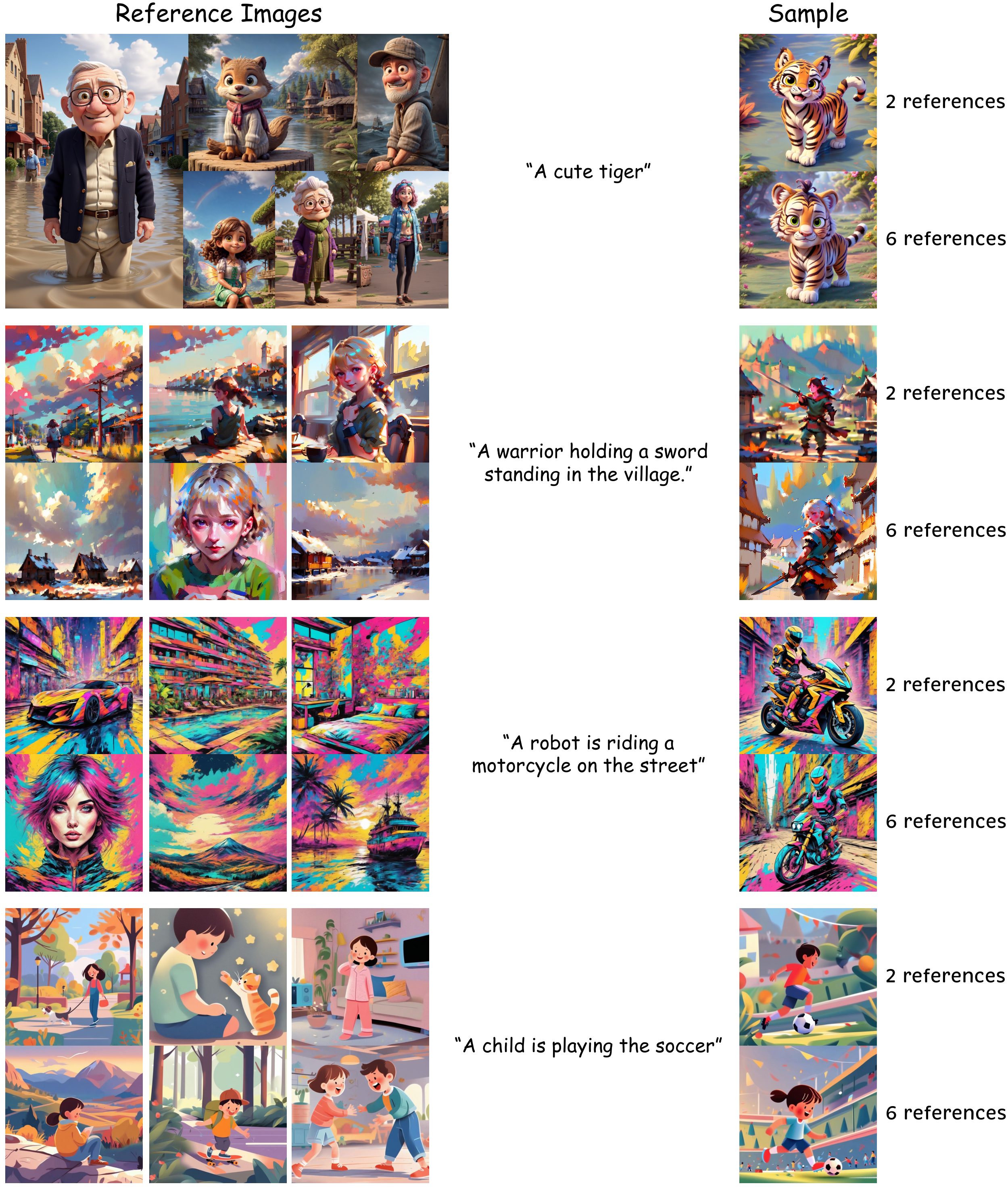

More generated samples of style consistency with EasyRef in a zero-shot setting.